Classification is an example of a supervised machine learning technique in which you train a model using data that includes both the features and known values for the label, so that the model learns to fit the feature combinations to the label. Then, after training has been completed, you can use the trained model to predict labels for new items for which the label is unknown.

You can use Microsoft Azure Machine Learning designer to create classification models by using a drag and drop visual interface, without needing to write any code.

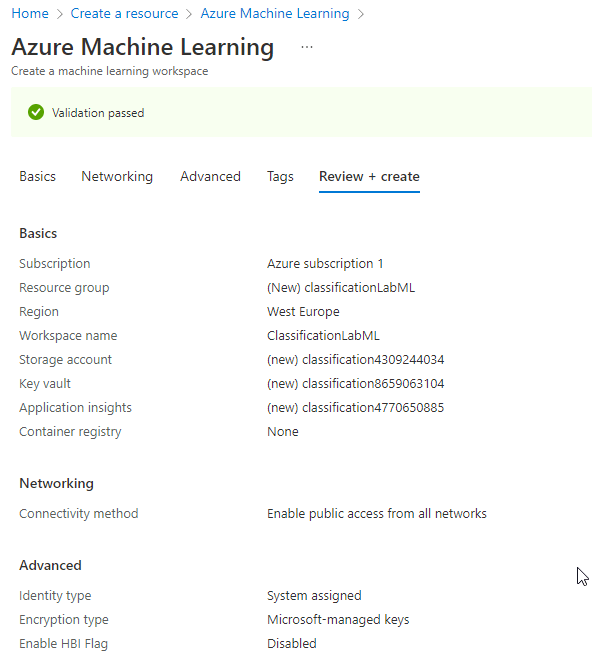

Start by creating an Azure Machine Learning workspace.

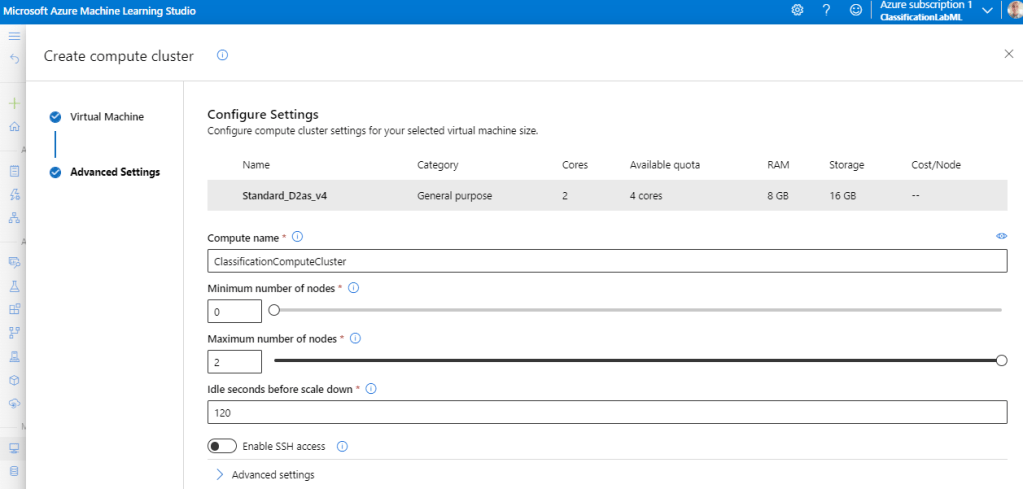

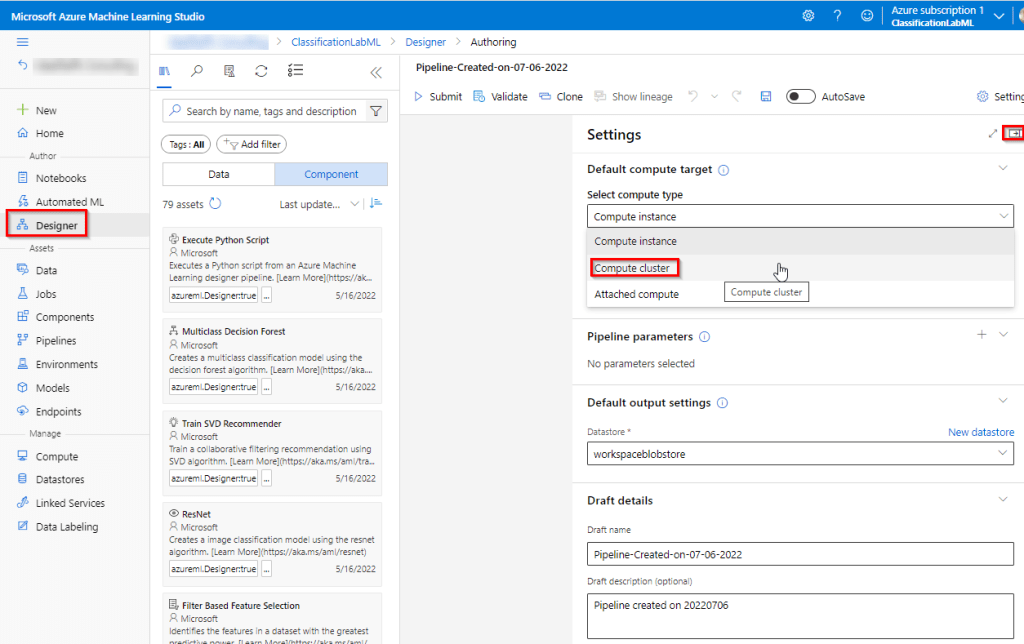

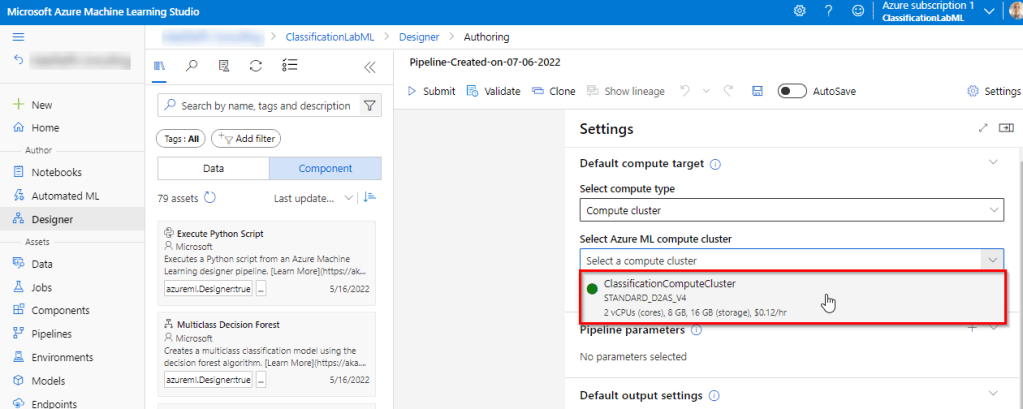

Next, create compute resources. Launch Azure Machine Learning studio and create a simple two-node compute cluster.

These resources should be enough for this exercise.

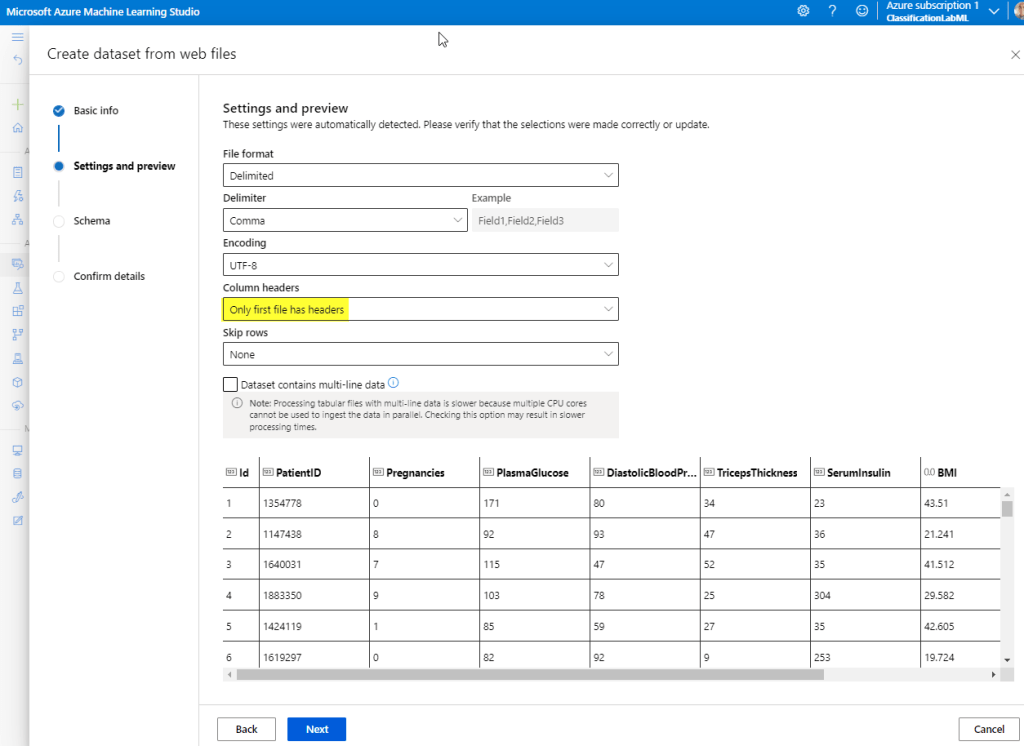

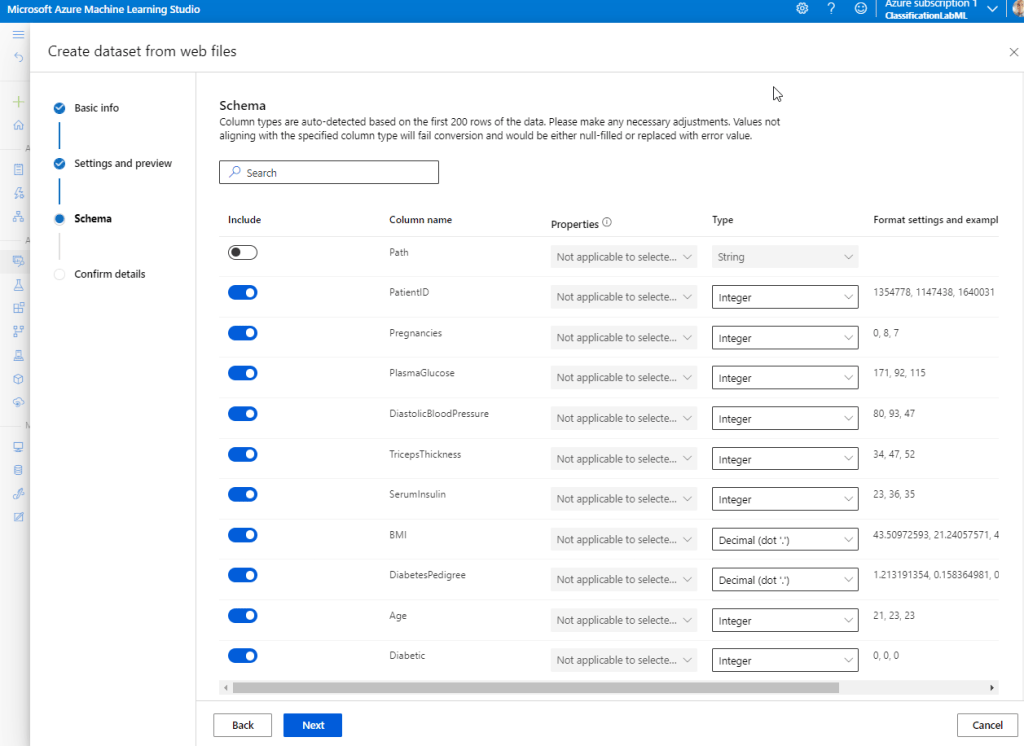

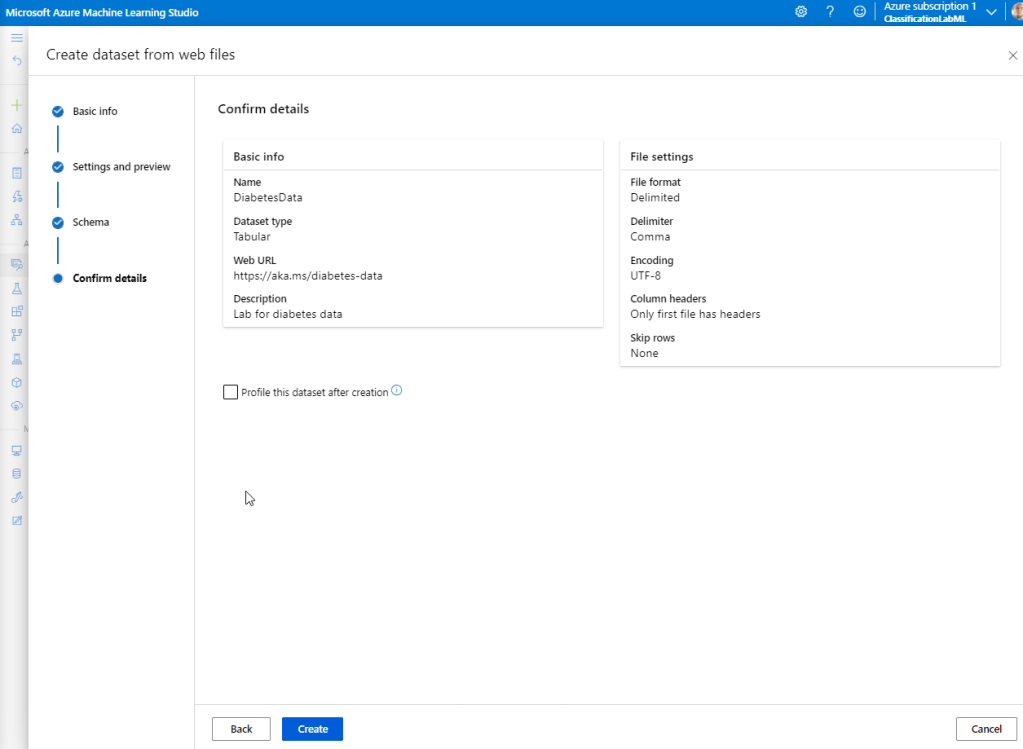

As the dataset, we will use web files provided by Microsoft at https://aka.ms/diabetes-data

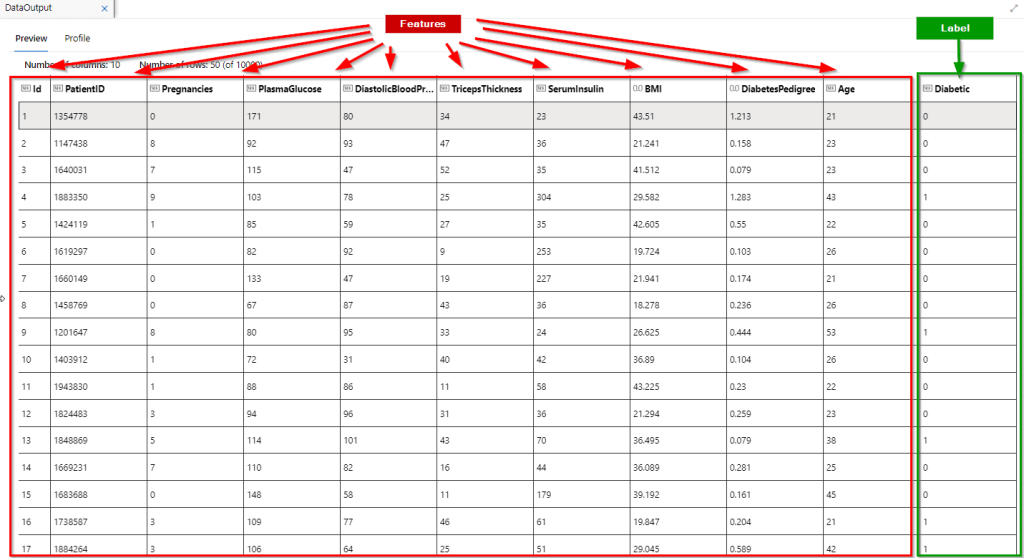

Explore the data that was imported.

Create a pipeline in the Designer.

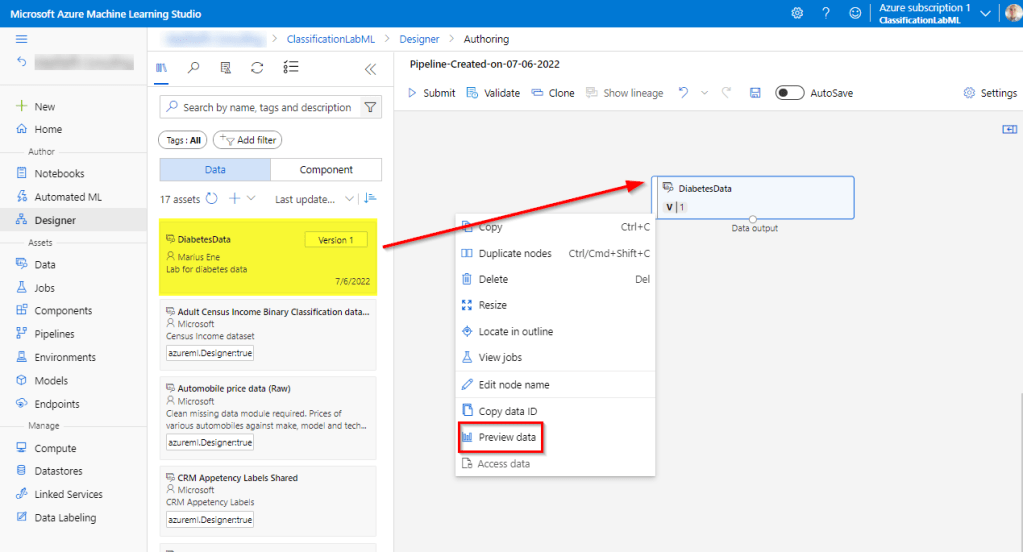

Drag the DiabetesData dataset to the canvas and right-click it to select Preview data.

The columns that describe each patient are called features, while the last column, which records whether the person is diabetic or not, is called the label.

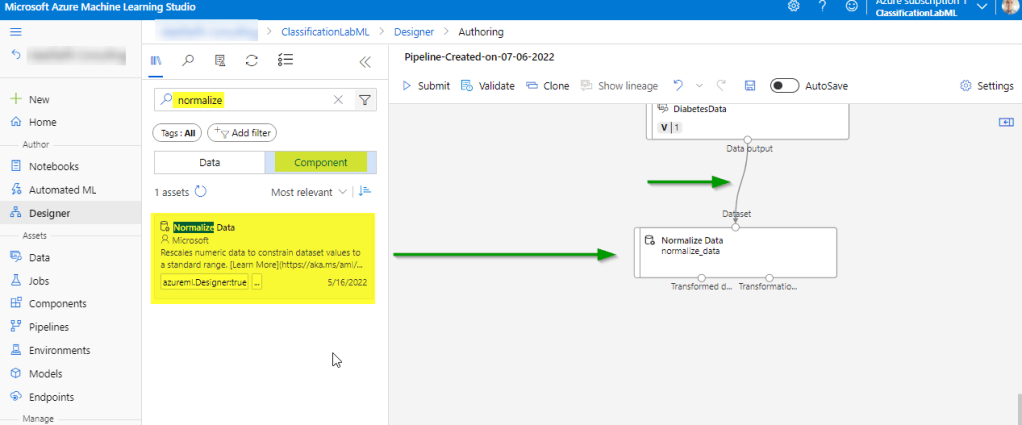

Because the features contain numbers that are on different scales, we need to normalize the data.

Under Components, search for Normalize Data, then drag it to the canvas and connect the DiabetesData dataset to it.

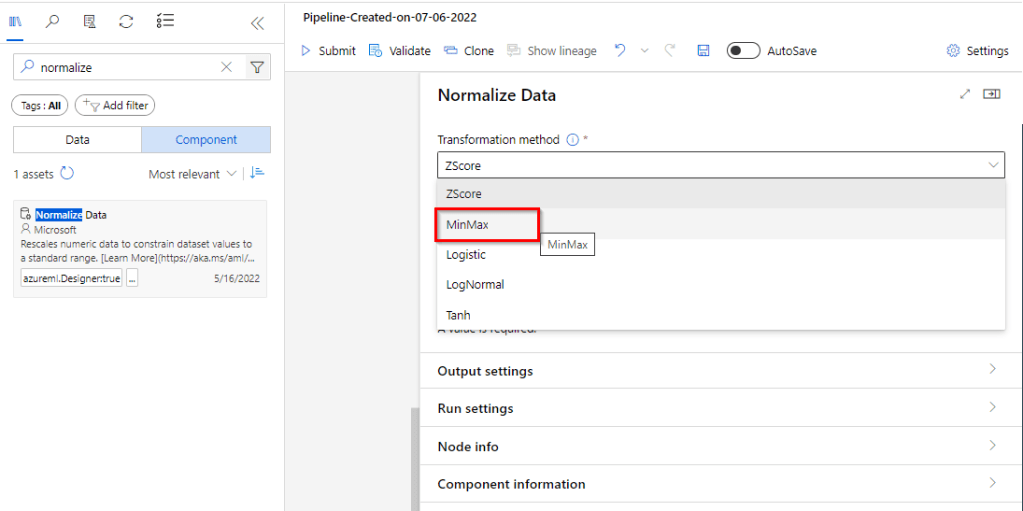

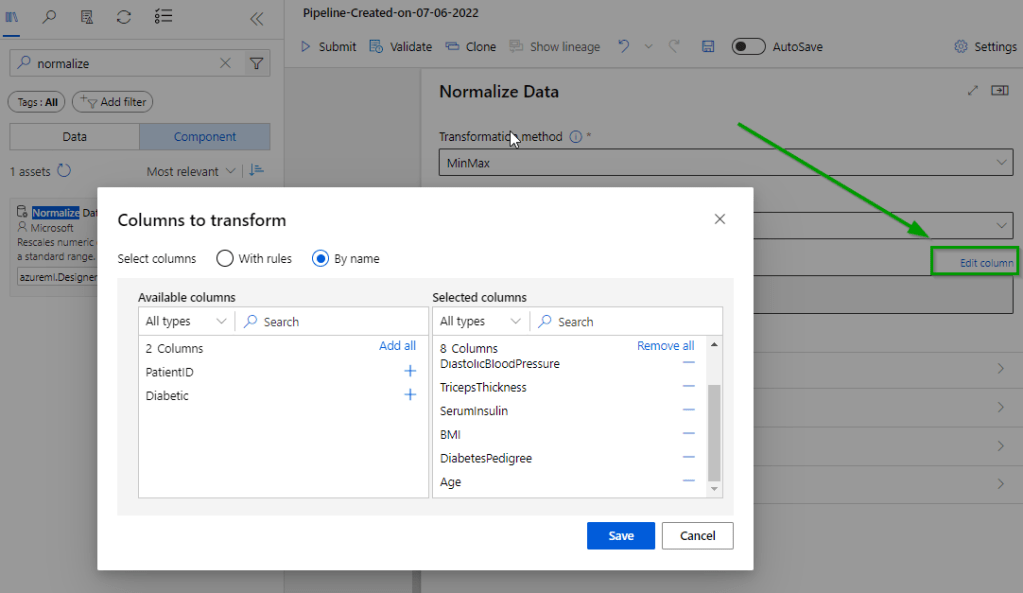

Double-click the Normalize Data module and apply the configuration below:

After this, run the pipeline by selecting Submit.

After it completes, see the job details and the transformed data. The numbers should now be normalized to a common scale.
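To make the effect of normalization concrete, here is a minimal sketch in plain Python. It assumes min-max scaling, which is one of the transformation methods the Normalize Data module offers; the method selected in your configuration may differ. The function name `min_max_scale` is illustrative, and the sample values are taken from the patient rows used later in this article.

```python
def min_max_scale(values):
    """Rescale a list of numbers linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column has no spread; map every value to 0.0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Two features on very different scales (assumed min-max method).
plasma_glucose = [104, 73, 115]
diabetes_pedigree = [1.350472047, 1.074147566, 0.741159926]

scaled_glucose = min_max_scale(plasma_glucose)
scaled_pedigree = min_max_scale(diabetes_pedigree)

print(scaled_glucose)
print(scaled_pedigree)
```

After scaling, both features lie between 0 and 1, so neither dominates the other purely because of its units.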

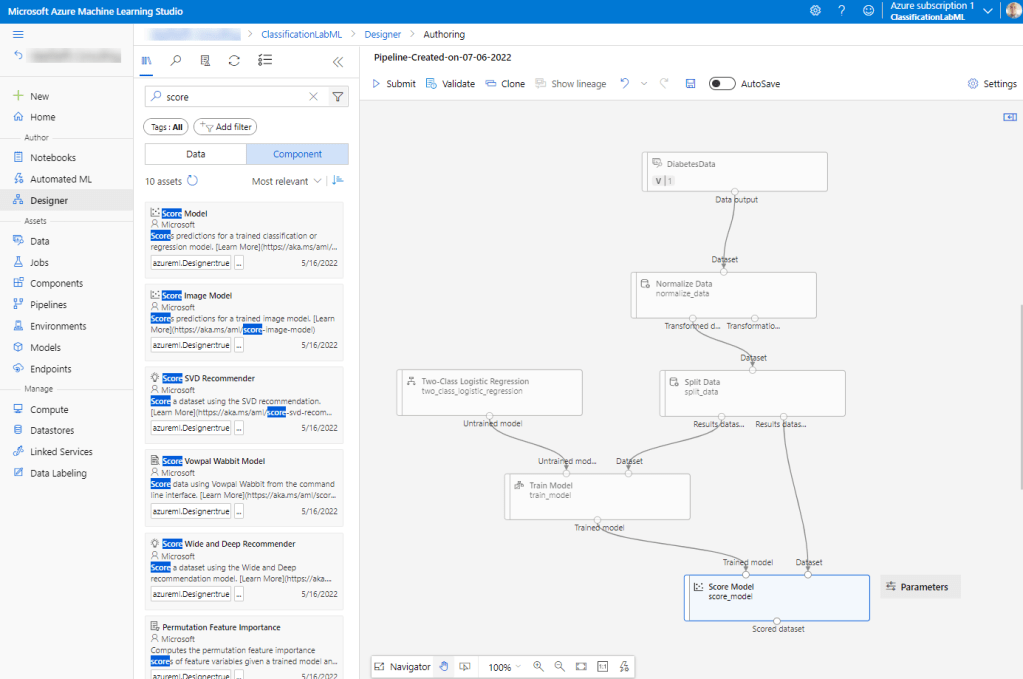

Next add the modules as depicted in the following image and connect them accordingly.

The Diabetic label the model will predict is a class (0 or 1), so we need to train the model using a classification algorithm. Specifically, there are two possible classes, so we need a binary classification algorithm.

Configure the Split Data module as follows:

Now we can run the training pipeline by clicking Submit.

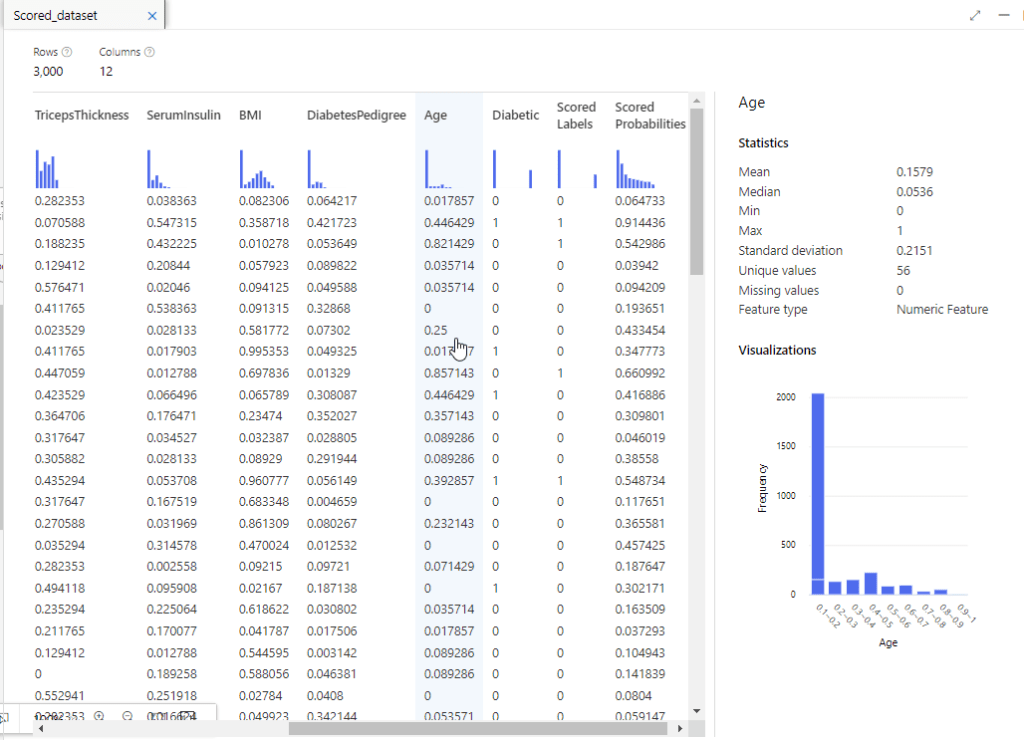

When the experiment run has finished, select Job details. Right-click the Score Model module on the canvas, and click on Preview data. Select Scored dataset to view the results.
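The scored dataset contains a Scored Probabilities column alongside Scored Labels. As a rough sketch of how the two relate, the snippet below assumes the label is 1 whenever the probability reaches a threshold of 0.5. Both the threshold value and the function name `label_from_probability` are assumptions made for illustration, and the probabilities are made-up values rather than pipeline output.

```python
def label_from_probability(probability, threshold=0.5):
    """Return class 1 if the probability meets the threshold, else class 0."""
    return 1 if probability >= threshold else 0


# Illustrative probabilities, not results from the pipeline.
scored_probabilities = [0.91, 0.12, 0.50, 0.49]
scored_labels = [label_from_probability(p) for p in scored_probabilities]

print(scored_labels)
```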

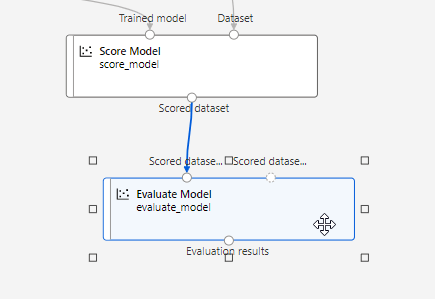

The model is predicting values for the Diabetic label, but how reliable are its predictions? To assess that, you need to evaluate the model. To do this, we need to add another module, Evaluate Model, to the training pipeline.

Submit the pipeline again and, once it has finished, right-click the Evaluate Model module and select Preview data, then Evaluation results.

Review the metrics to the left of the confusion matrix, which include:

- Accuracy: The ratio of correct predictions (true positives + true negatives) to the total number of predictions. In other words, what proportion of diabetes predictions did the model get right?

- Precision: The fraction of the cases classified as positive that are actually positive (the number of true positives divided by the number of true positives plus false positives). In other words, out of all the patients that the model predicted as having diabetes, how many are actually diabetic?

- Recall: The fraction of positive cases correctly identified (the number of true positives divided by the number of true positives plus false negatives). In other words, out of all the patients who actually have diabetes, how many did the model identify?

- F1 Score: An overall metric that essentially combines precision and recall.

- We’ll return to AUC later.
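The definitions above can be sketched as a small function that works from the four confusion-matrix counts. The function name and the counts are hypothetical, chosen only to illustrate the arithmetic. F1 is computed here as the harmonic mean of precision and recall.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute accuracy, precision, recall and F1 from confusion-matrix counts."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts, not results from the pipeline in this article.
metrics = classification_metrics(tp=80, fp=20, tn=880, fn=20)
for name, value in metrics.items():
    print(name, round(value, 3))
```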

Of these metrics, accuracy is the most intuitive. However, you need to be careful about using simple accuracy as a measurement of how well a model works. Suppose that only 3% of the population is diabetic. You could create a model that always predicts 0 and it would be 97% accurate, but not very useful! For this reason, most data scientists use other metrics like precision and recall to assess classification model performance.
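The 3% scenario can be worked through in a few lines. This sketch assumes a made-up population of 1,000 people and a "model" that always predicts 0. It shows that accuracy looks high while recall is zero.

```python
population = 1000
actual = [1] * 30 + [0] * 970   # 3% diabetic, 97% not diabetic
predicted = [0] * population    # a "model" that always predicts 0

# Accuracy: share of predictions that match the actual label.
correct = sum(1 for a, p in zip(actual, predicted) if a == p)
accuracy = correct / population

# Recall: share of actual positives that the model identified.
true_positives = sum(1 for a, p in zip(actual, predicted) if a == 1 and p == 1)
recall = true_positives / sum(actual)

print(accuracy, recall)
```

The model never identifies a single diabetic patient, which is exactly what the accuracy figure hides.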

Look above the Threshold slider at the ROC curve (ROC stands for receiver operating characteristic, but most data scientists just call it a ROC curve). Another term for recall is True positive rate, and it has a corresponding metric named False positive rate, which measures the number of negative cases incorrectly identified as positive compared to the number of actual negative cases. Plotting these metrics against each other for every possible threshold value between 0 and 1 results in a curve. In an ideal model, the curve would go all the way up the left side and across the top, so that it covers the full area of the chart. The larger the area under the curve (which can be any value from 0 to 1), the better the model is performing; this is the AUC metric listed with the other metrics below.

To get an idea of how this area represents the performance of the model, imagine a straight diagonal line from the bottom left to the top right of the ROC chart. This represents the expected performance if you just guessed or flipped a coin for each patient: you could expect to get around half of them right and half of them wrong, so the area under the diagonal line represents an AUC of 0.5. If the AUC for your binary classification model is higher than this, then the model performs better than a random guess.
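As a minimal sketch of how a ROC curve and its AUC can be computed by hand, the code below sweeps a threshold over a handful of scores, records the false positive rate and true positive rate at each step, and sums the area with the trapezoidal rule. The function names, labels, and scores are all illustrative. This is not how the Evaluate Model module is implemented, and the sketch assumes both classes are present in the data.

```python
def roc_points(labels, scores):
    """Return (FPR, TPR) points, moving from the strictest threshold to the loosest."""
    positives = sum(labels)
    negatives = len(labels) - positives
    # Start above every score (nothing predicted positive), then use each distinct score.
    thresholds = [float("inf")] + sorted(set(scores), reverse=True)
    points = []
    for t in thresholds:
        tp = sum(1 for y, s in zip(labels, scores) if s >= t and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= t and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Area under the curve, using the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Illustrative labels and predicted probabilities.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]

model_auc = auc(roc_points(labels, scores))
print(round(model_auc, 3))
```

In this toy example one negative case outranks one positive case, so the AUC falls a little short of 1 but stays well above the 0.5 of a random guess.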

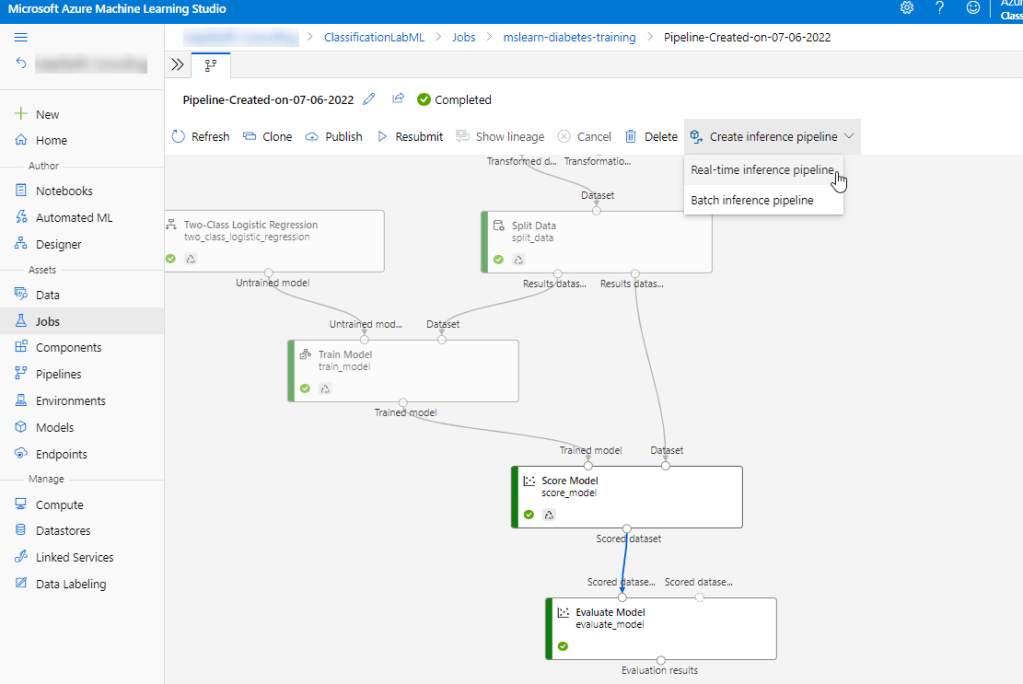

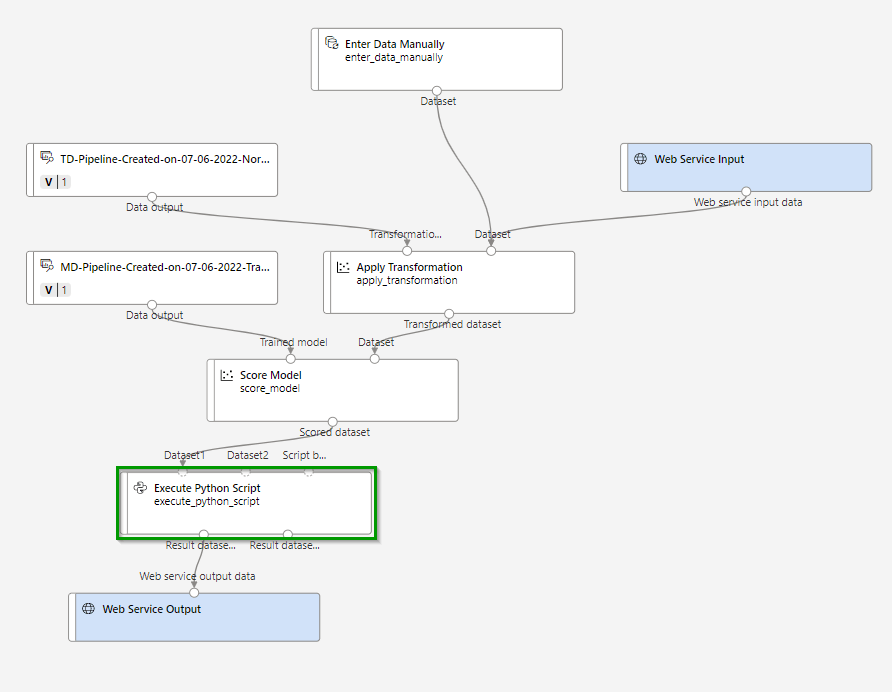

Now we need to actually use the model by creating an inference pipeline.

Settings should be like this. Remove the modules in red.

Add the following module, Enter Data Manually.

Add an Execute Python Script module to return only the patient ID, predicted label value, and probability. Here is the Python code:

    import pandas as pd

    def azureml_main(dataframe1=None, dataframe2=None):
        # Keep only the ID, predicted label, and probability columns.
        scored_results = dataframe1[['PatientID', 'Scored Labels', 'Scored Probabilities']]
        # Rename on a new frame instead of in place to avoid modifying a slice.
        scored_results = scored_results.rename(
            columns={'Scored Labels': 'DiabetesPrediction',
                     'Scored Probabilities': 'Probability'})
        return scored_results

In the Enter Data Manually module add the following data.

    PatientID,Pregnancies,PlasmaGlucose,DiastolicBloodPressure,TricepsThickness,SerumInsulin,BMI,DiabetesPedigree,Age
    1882185,9,104,51,7,24,27.36983156,1.350472047,43
    1662484,6,73,61,35,24,18.74367404,1.074147566,75
    1228510,4,115,50,29,243,34.69215364,0.741159926,59

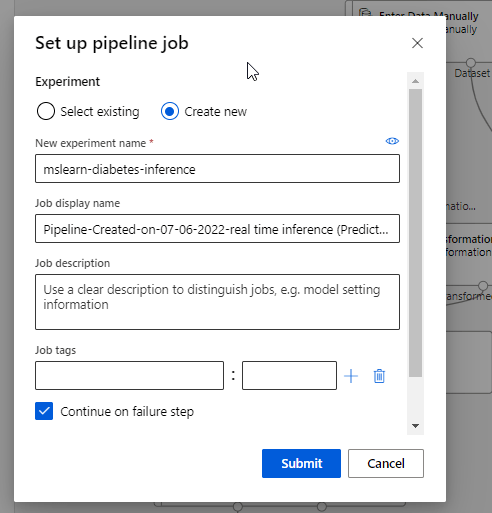

Now run the pipeline as a new experiment.

Once it's finished, we are ready to publish the pipeline so that client applications can use it.

Under the Job details section, click the Deploy button and deploy a new real-time endpoint, using the following settings:

- Name: predict-diabetes

- Description: Classify diabetes

- Compute type: Azure Container Instance

After the deployment finishes, navigate to Endpoints and run a test.

You have just tested a service that is ready to be connected to a client application using the credentials in the Consume tab.
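As a rough sketch of what a client application might do, the code below builds a JSON POST request for the endpoint using only the Python standard library. The URL, key, input name (`input1`), and payload shape are all assumptions made for illustration. Take the real REST endpoint, key, and request schema from the sample code in the Consume tab.

```python
import json
import urllib.request

# Placeholders (assumptions): replace with the values from the Consume tab.
ENDPOINT_URL = "https://example.invalid/score"
API_KEY = "YOUR_KEY"
INPUT_NAME = "input1"  # hypothetical input name; check the Consume tab


def build_request(patients, url=ENDPOINT_URL, key=API_KEY):
    """Build (but do not send) a POST request carrying patient records as JSON."""
    # Assumed payload shape; the actual schema is shown in the Consume tab.
    payload = {"Inputs": {INPUT_NAME: patients}, "GlobalParameters": {}}
    body = json.dumps(payload).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Authorization": "Bearer " + key,
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# One of the patient rows entered manually earlier in this article.
patient = {
    "PatientID": 1882185, "Pregnancies": 9, "PlasmaGlucose": 104,
    "DiastolicBloodPressure": 51, "TricepsThickness": 7, "SerumInsulin": 24,
    "BMI": 27.36983156, "DiabetesPedigree": 1.350472047, "Age": 43,
}
request = build_request([patient])

# To actually call the service, once the placeholders are replaced:
# response = urllib.request.urlopen(request).read()
```

Building the request separately from sending it makes it easy to inspect the payload before pointing the code at a live endpoint.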